-

Mechanophore

2021; for virtual and robotic strings and percussion; by Scott Barton; robotic instruments by WPI’s Music Perception and Robotics Lab and EMMI

Mechanophore was inspired by the force-sensitive molecular units of the same name. As mechanophores are subjected to physical forces, they activate chemical reactions that can communicate their state (e.g. color change) or…

-

Tempo Mecho

2019; for the robotic instruments PAM, modular percussion and percussive aerophone (built by WPI’s MPR Lab and EMMI); by Scott Barton

A groove changes identity depending on the tempo it inhabits. Typically, there are small ranges within which a rhythm feels at home. Once there, a rhythm reveals the energy, detail and character of its…

-

Parthenope: A Robotic Musical Siren

M. Sidler, M. Bisson, J. Grotz, and S. Barton (2020). Proceedings of the International Conference on New Interfaces for Musical Expression, Birmingham City University, pp. 297–300. full text

-

Mixing AI with Music & Literature

March 4, 2019; by Jessica Messier; The Herd

-

Experiment in Augmentation 1

Spring 2017; human-robot improvisation with Cyther and Modular Percussion (made by the MPR Lab)

In the work, a human performer, Cyther (a human-playable robotic zither) and modular percussion robots interact with each other. The interaction between these performers is enabled by both the physical design of Cyther and software written by the composer. The perceptual…

-

Rise of a City

2009; for guitar and robotic ensemble (PAM, MADI and CADI); produced and recorded by Scott Barton; mixed by Marc Urselli and Scott Barton at East Side Sound Studios, NYC

Rise of a City introduces a human performer to the robotic creations of EMMI (Expressive Machines Musical Instruments, expressivemachines.com) for the first time. The piece features…

-

Mechatronic Expression: Reconsidering Expressivity in Music for Robotic Instruments

S. Kemper, S. Barton (2018). In Proceedings of the 18th International Conference on New Interfaces for Musical Expression (NIME). Blacksburg, VA.

-

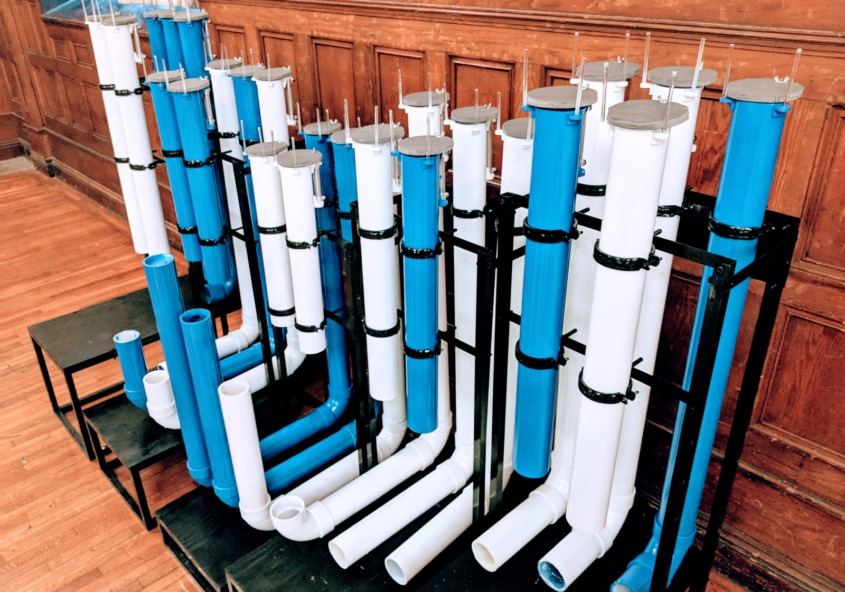

A Robotic Percussive Aerophone

K. Sundberg, S. Barton, A. Walter, T. Sane, L. Baker, A. O’Brien (2018). In Proceedings of the 18th International Conference on New Interfaces for Musical Expression (NIME). Blacksburg, VA. full text

-

Musical Robotics presentation at Music and the Brain Seminar

April 3, 2018; WPI; Worcester, MA